# Docker

# Official website

::: note
Stateful containers are not well suited to Docker deployment.
:::

# Image tarballs for download

Image tarballs for x86 hosts:

```bash
# nginx 1.25.1
wget http://singlecloud.cfsingle.fun/dockerimages/x86/nginx_v1.25.1.tar
# openjdk 8
wget http://singlecloud.cfsingle.fun/dockerimages/x86/openjdk8.tar
# mysql 5.7.44
wget http://singlecloud.cfsingle.fun/dockerimages/x86/mysql_v5.7.44.tar
# rabbitmq 3.12.13 (management)
wget http://singlecloud.cfsingle.fun/dockerimages/x86/rabbitmq3.12.13-management.tar
# postgres 16.3
wget http://singlecloud.cfsingle.fun/dockerimages/x86/postgres_v16.3.tar
# redis 7.2.2
wget http://singlecloud.cfsingle.fun/dockerimages/x86/redis_v7.2.2.tar
# frps 0.59 (amd64)
wget http://singlecloud.cfsingle.fun/dockerimages/x86/frps_amd64_v0.59.tar
```

# Installation

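# Installing with yum

::: danger
The `docker` package installed directly with yum like this is not the latest Docker release.
:::

```bash
yum install -y docker
```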

# Binary installation

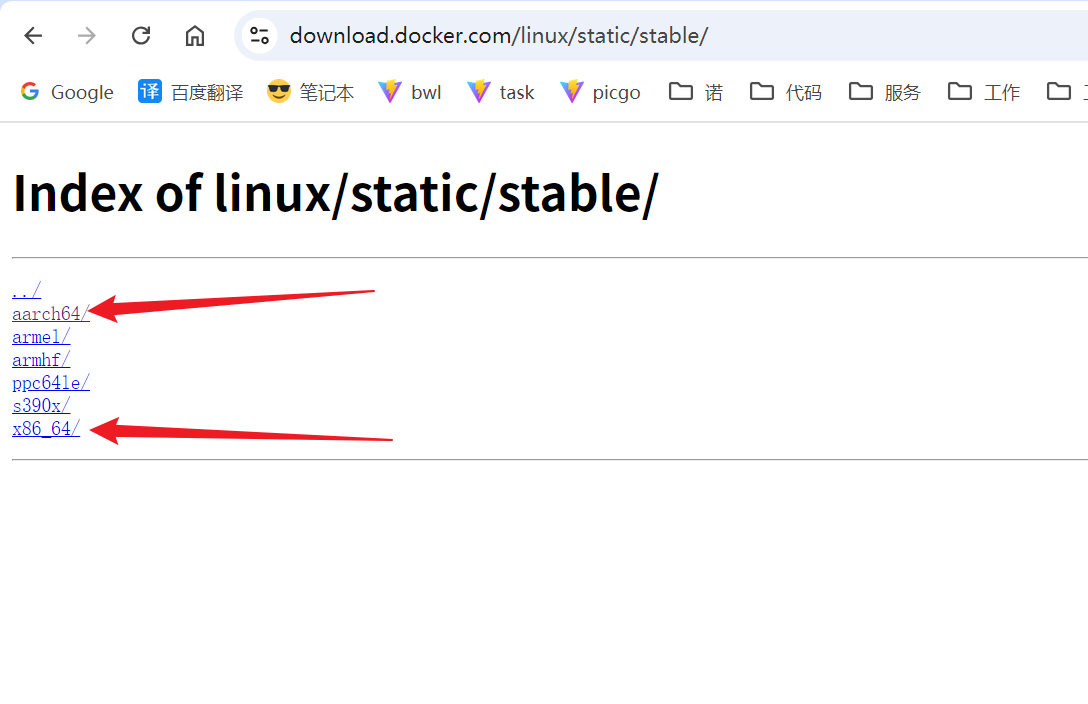

1. Download the installation package

Official static binaries: https://download.docker.com/linux/static/stable/

Mirror on Qiniu Cloud:

```bash
wget http://singlecloud.cfsingle.fun/software/docker-26.1.3.tgz
```

2. Extract the package

```bash
tar -zxvf docker-26.1.3.tgz
```

3. Copy the extracted Docker binaries to `/usr/bin`

```bash
sudo cp docker/* /usr/bin/
```

4. Register Docker as a systemd service

```bash
vim /etc/systemd/system/docker.service
```

```ini
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
```

5. Set the unit file permissions. A unit file only needs to be readable, and systemd logs a warning if it is marked executable.

```bash
chmod 644 /etc/systemd/system/docker.service
```

6. Reload the systemd configuration

```bash
systemctl daemon-reload
```

7. Enable Docker at boot

```bash
systemctl enable docker.service
```

8. Start Docker

```bash
systemctl start docker
```
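Steps 4 and 5 can also be scripted instead of editing the unit file by hand in vim. Below is a minimal sketch, assuming bash. `write_docker_unit` and its optional path argument are hypothetical names introduced here; the unit content is the one shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the docker.service unit shown above to a file.
# The target path defaults to /etc/systemd/system/docker.service.
write_docker_unit() {
  local target="${1:-/etc/systemd/system/docker.service}"
  # Quoted 'EOF' keeps $MAINPID literal so systemd, not the shell, expands it
  cat > "$target" <<'EOF'
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF
  # Unit files only need to be readable, not executable
  chmod 644 "$target"
}
```

To use it, run as root: source the snippet and call `write_docker_unit`. Then run `systemctl daemon-reload && systemctl enable --now docker`, where `--now` enables and starts the service in one step.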

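# Issues

Docker's startup is closely tied to the firewall. Docker installs its own iptables rules when it starts, so after a firewall configuration change (for example a firewalld restart) you often have to restart Docker before the change takes effect.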

# Configuration file

Edit `/etc/docker/daemon.json`, then restart Docker to apply it. `daemon-reload` is only strictly needed after changing a systemd unit file, but running it here does no harm.

```bash
vim /etc/docker/daemon.json
systemctl daemon-reload
systemctl restart docker
```

```json
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "3"
  },
  "registry-mirrors": [
    "https://hub.uuuadc.top",
    "https://docker.anyhub.us.kg",
    "https://dockerhub.jobcher.com",
    "https://do.nark.eu.org",
    "https://dc.j8.work",
    "https://docker.m.daocloud.io",
    "https://dockerproxy.com",
    "https://mirror.ccs.tencentyun.com",
    "https://i1el1i0w.mirror.aliyuncs.com",
    "https://hub-mirror.c.163.com",
    "https://registry.aliyuncs.com",
    "https://registry.docker-cn.com",
    "https://docker.mirrors.ustc.edu.cn"
  ],
  "experimental": true,
  "features": {
    "buildkit": true
  }
}
```

Public registry mirrors come and go, and some entries in this list have been shut down (for example `https://registry.docker-cn.com`). Keep only the mirrors that still respond.
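A malformed `daemon.json` stops dockerd from starting at all; a trailing comma is the usual culprit. Below is a minimal sketch that checks the JSON syntax before restarting, assuming `python3` is installed on the host. `check_daemon_json` is a hypothetical name. It only checks JSON syntax, not whether Docker recognizes every key. Recent Docker Engine releases also provide `dockerd --validate --config-file=/etc/docker/daemon.json` for a full check.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the file is syntactically valid JSON.
# Relies on python3's built-in json.tool module.
check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  if python3 -m json.tool "$file" > /dev/null 2>&1; then
    echo "OK: $file"
  else
    echo "INVALID: $file" >&2
    return 1
  fi
}
```

Typical use: `check_daemon_json && systemctl restart docker`. Docker is then only restarted when the file parses.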

# System commands

```bash
systemctl start docker      # start
systemctl stop docker       # stop
systemctl restart docker    # restart
systemctl status docker     # show the Docker service status
systemctl enable docker     # start at boot
docker info                 # show a summary of the Docker installation
docker --help               # show Docker's help
docker <command> --help     # show help for a specific command
```

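# Common commands

```bash
docker stop $(docker ps -q)                # stop all running containers
docker restart $(docker ps -q)             # restart all running containers
docker search nginx                        # search Docker Hub for images that can be pulled (e.g. nginx, activemq)
docker images                              # list local images
docker ps                                  # list running containers
docker ps -a                               # list all containers, including stopped ones (add -q to print only IDs)
docker start <name>                        # start a container
docker stop <name>                         # stop a running container
docker restart <name>                      # restart a container
docker update --restart=always <container-id>   # change the restart policy of an existing container
docker pull <image>[:tag]                  # pull an image
docker rm <container-name-or-id>           # remove a container
docker rmi <image-name-or-id>              # remove an image
docker exec -it nginx /bin/bash            # open a shell in a container (use /bin/sh if the image has no bash)
docker inspect <name>                      # show container details
docker cp <container>:<path> <host-path>   # copy a file from the container to the host
docker logs <name>                         # show logs
docker logs -f <name>                      # keep following the logs
docker logs --tail 10 <name>               # show the last 10 log lines
docker system df                           # show Docker's disk usage
docker image inspect <image>:latest | grep -i version   # find the version behind the latest tag
docker inspect --format='{{.Created}}' <container-id>   # show when a container was created
docker inspect <image-id> | grep Architecture            # show the image's CPU architecture
```

Useful `docker run` options:

- `--storage-opt size=2G`: caps the container's writable layer at 2 GB. With the overlay2 driver this requires `/var/lib/docker` to be on XFS mounted with `pquota`.
- `--network host`: host networking. The container uses the host's ports directly, with no port mapping.
- `--restart=always`: restarts the container automatically, including after Docker itself restarts.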
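`docker stop $(docker ps -q)` fails with an error when no container is running, because `docker stop` then receives no arguments. Below is a minimal sketch that pipes the IDs through `xargs -r` instead; `-r` (GNU and BusyBox xargs) skips the command on empty input. The function name and the `DOCKER_BIN` override are hypothetical. The override exists only so the function can be tried without a Docker daemon.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a docker subcommand (stop, restart, ...) on all running containers.
# DOCKER_BIN can point at another executable for testing; it defaults to "docker".
on_all_running() {
  local action="$1"
  local docker="${DOCKER_BIN:-docker}"
  # -r: do not run the command at all when `docker ps -q` prints nothing
  "$docker" ps -q | xargs -r "$docker" "$action"
}
```

Usage: `on_all_running stop` or `on_all_running restart`.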

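# Exporting images

For base software images, prefer downloading the tar package from the official source. Images pulled on a server and saved as tarballs are sometimes damaged and cannot be loaded.

Tip: export by `name:tag`, not by image ID. Otherwise the imported image shows up without a name, as `<none>:<none>`.

```bash
docker save -o nginx.tar nginx:latest
```

# Importing images

```bash
docker load -i nginx.tar
```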
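Copied tarballs are sometimes damaged, so it helps to record a checksum right after `docker save` and compare it on the target server before `docker load`. Below is a minimal sketch using `sha256sum` (GNU coreutils) and `tar`. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking an image tarball before `docker load`.

# On the source machine: write <file>.sha256 next to the tarball.
record_checksum() {
  local tarball="$1"
  # Run from the tarball's directory so the checksum file stores a bare file name
  (cd "$(dirname "$tarball")" && sha256sum "$(basename "$tarball")" > "$(basename "$tarball").sha256")
}

# On the target machine: verify the checksum and that tar can read every entry.
verify_tarball() {
  local tarball="$1"
  (cd "$(dirname "$tarball")" && sha256sum -c --quiet "$(basename "$tarball").sha256") || return 1
  tar -tf "$tarball" > /dev/null || return 1
  echo "OK: $tarball"
}
```

Typical flow:

1. On the source machine, run `docker save -o nginx.tar nginx:latest && record_checksum nginx.tar`.
2. Copy both `nginx.tar` and `nginx.tar.sha256` to the target.
3. On the target, run `verify_tarball nginx.tar && docker load -i nginx.tar`.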

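# Directory layout

Subdirectories of Docker's data root (`/var/lib/docker` by default):

- `builder`: data used when building Docker images.
- `buildkit`: state and cache of BuildKit, the official build engine. BuildKit supports multi-stage builds, cache management, parallel builds and multi-platform builds.
- `containerd`: data of containerd. containerd manages the container lifecycle (start, stop, restart) and images, and provides a consistent runtime environment.
- `containers`: the container runtime directory. Each container, running or stopped, has a subdirectory here named with its container ID.
- `image`: image metadata. An image is a read-only file system that serves as the template for creating containers.
- `network`: Docker network configuration. Docker networks provide a simple, extensible way to organize containers, with network isolation, service discovery and load balancing.
- `overlay2`: data of the overlay2 storage driver (a union mount). Multiple containers share the same read-only image layers while each container keeps its own file-system changes. `df -h` shows an overlay mount for each running container.
- `plugins`: Docker plugins, which extend Docker.
- `runtimes`: container runtimes. Docker uses `runc` by default.
- `swarm`: data of Swarm, Docker's cluster management tool, which groups several Docker hosts into one cluster for unified management and scheduling.
- `tmp`: temporary files.
- `trust`: Docker Content Trust data, used to make sure images were not tampered with in transit.
- `volumes`: Docker volumes (container data volumes). Volumes provide persistent, shareable storage that survives stopping and removing the container. A container that uses anonymous volumes gets a directory named with the volume ID here.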

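Troubleshooting disk usage:

```bash
du -sh *                                   # size of each entry in the current directory
docker system df                           # Docker's own disk usage summary
docker system prune                        # remove stopped containers, unused networks, dangling images and unused build cache
docker inspect -f '{{ .Mounts }}' <container-name-or-id>   # show a container's mounts
```

`docker system df` reports:

- Images: space used by all images, both pulled and locally built.
- Containers: space used by containers, i.e. each container's writable layer.
- Local Volumes: space used by volumes mounted into containers.
- Build Cache: cache produced while building images (only with BuildKit, available since Docker 18.09).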
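The `du -sh *` step can be wrapped so that it always runs against Docker's data root and sorts the result. Below is a minimal sketch. The default root `/var/lib/docker` is Docker's standard location; `docker info --format '{{.DockerRootDir}}'` prints the actual one. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the largest entries under Docker's data root.
# sort -h understands the K/M/G suffixes that du -h prints.
docker_disk_top() {
  local root="${1:-/var/lib/docker}"
  local count="${2:-10}"
  du -sh "$root"/* 2> /dev/null | sort -rh | head -n "$count"
}
```

Run it as root. `docker_disk_top` shows the ten largest directories; `docker system prune` then removes what Docker no longer needs.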

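# Understanding Docker disk usage

# Image usage

```bash
docker images              # list images
docker rmi -f <image-id>   # remove an image
```

# Container usage

A container is a runnable system built from an image. When a container is created, data appears in two directories:

- `/var/lib/docker/containers/<ID>`: all of the container's logs, saved on the host as JSON.
- `/var/lib/docker/overlay2`: the container's file system. Data the container writes to its file system ends up here on the host.

# Volume usage

A volume connects data inside the container to a location on the host. `docker inspect <container-id>` shows where a container's volumes are mounted. Under `Mounts`, `Destination` is the directory inside the container and `Source` is the directory on the host.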

# Issues

# Data volumes taking too much space

This is usually caused by containers running without a log size limit. Use Docker commands to find the container the data belongs to, then redeploy it: remove the old container and create it again.

# Cannot create a container

Error:

```text
docker: Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: container_linux.go:318: starting container process caused “permission denied”: unknown
```

Fix: remove podman, then restart Docker.

```bash
yum remove podman
systemctl restart docker
```

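# Running a command without allocating a terminal

```bash
docker exec nginx nginx -t   # e.g. test the nginx configuration inside the container
```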

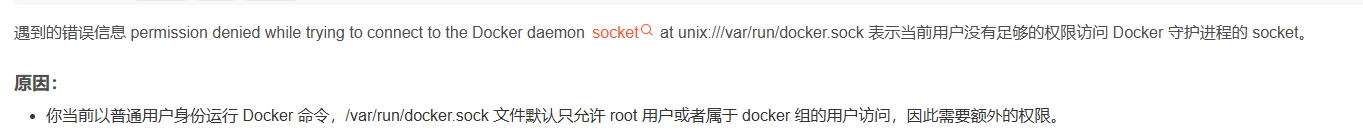

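# Permission issues

Three ways to fix "permission denied" errors when talking to the Docker daemon:

1. Use `sudo`.
2. Add the current user to the `docker` group.
3. Change the permissions of `/var/run/docker.sock` (recommended):

```bash
sudo chmod 666 /var/run/docker.sock
```

Note that option 3 lets every local user control Docker, which amounts to root access. The socket also gets its default permissions back whenever Docker restarts.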

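# Time zone mismatch

Add a time-zone variable when starting the container: `-e TZ=Asia/Shanghai`. This only takes effect if the image contains time-zone data (tzdata).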

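# Container logs

Write the logs of the last 225 minutes to `file.txt`. `>>` creates the file if it does not exist, and `2>&1` also captures what the container wrote to stderr.

```bash
docker logs --since 225m 4261ce7f5c14 >> file.txt 2>&1
```

Save the logs of a specific time range:

```bash
docker logs --since='2022-01-14T00:58:00' --until='2022-01-14T01:00:00' name >> file.log 2>&1
```

# Viewing the most recent container logs

Show the last 500 lines with timestamps and keep following new output. This is not a reverse-order view: the newest lines are at the bottom.

```bash
docker logs -f -t --tail=500 <container-id>
```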

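# Restart policies

```text
--restart=always           # always restart the container when it exits
--restart=on-failure:3     # restart only on a non-zero (abnormal) exit code, at most 3 times; a manually stopped container is not restarted (usually the preferred option)
--restart=no               # default: Docker does not restart the container when it exits
--restart=unless-stopped   # like always, except that a container already stopped before the Docker daemon restarted is not started again
```

# Changing the restart policy

```bash
docker container update --restart=always <container-name>
```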
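After `docker run --restart=...` or `docker container update`, you can read back the policy that Docker actually stored from the container's HostConfig. Below is a minimal sketch. `.HostConfig.RestartPolicy.Name` and `.HostConfig.RestartPolicy.MaximumRetryCount` are fields of `docker inspect` output. The function name and the `DOCKER_BIN` override, which is there only for trying it without a daemon, are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<policy>:<max retries>" for a container,
# e.g. "on-failure:3" or "always:0".
restart_policy() {
  local container="$1"
  "${DOCKER_BIN:-docker}" inspect \
    --format '{{.HostConfig.RestartPolicy.Name}}:{{.HostConfig.RestartPolicy.MaximumRetryCount}}' \
    "$container"
}
```

For example, after `docker container update --restart=on-failure:3 nginx`, `restart_policy nginx` should report the `on-failure` policy with 3 retries.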

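# Limiting CPU and memory

For example, limit the memory a container can use to 512 MB:

```bash
docker run -m 512m nginx
```

You can also set a soft limit (reservation). Docker enforces it when it detects that the host is running low on memory, and it must be lower than `-m`:

```bash
docker run -m 512m --memory-reservation=256m nginx
```

By default a container has unrestricted access to the host's CPUs. The `--cpus` option caps this; for example, allow the container at most two CPUs:

```bash
docker run --cpus=2 nginx
```

You can also set the container's CPU priority with `--cpu-shares`. The default is 1024, and a higher number means a higher priority. The weight is relative and only matters when containers compete for CPU:

```bash
docker run --cpus=2 --cpu-shares=2000 nginx
```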
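Docker interprets memory suffixes as binary multiples (k = 1024 bytes, m = 1024², g = 1024³). `docker inspect --format '{{.HostConfig.Memory}}' <container>` reports the limit back in those bytes; likewise, `--cpus=2` is stored as `.HostConfig.NanoCpus` = 2000000000. Below is a minimal sketch of the conversion, useful for checking that a limit was applied. `to_bytes` is a hypothetical helper, not a Docker command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert a Docker-style size (512m, 2g, 256k, 1024b) to bytes,
# using the same 1024-based multiples that Docker applies to -m / --memory.
to_bytes() {
  local size="${1,,}"              # lower-case, so 512M and 512m are equivalent
  local num="${size%[bkmg]}"       # strip one trailing unit letter, if present
  case "$size" in
    *k) echo $(( num * 1024 )) ;;
    *m) echo $(( num * 1024 * 1024 )) ;;
    *g) echo $(( num * 1024 * 1024 * 1024 )) ;;
    *)  echo "$num" ;;             # "b" suffix or a plain number: already bytes
  esac
}
```

Example check: `[ "$(docker inspect --format '{{.HostConfig.Memory}}' web)" = "$(to_bytes 512m)" ] && echo "limit applied"`, where `web` is a placeholder container name.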

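# ADD vs COPY in a Dockerfile

COPY copies files from the build context into the image. Source paths are resolved inside the build context, so files outside it (for example an absolute host path) cannot be copied, and the build fails with a "not found" error.

ADD also copies files from the build context into the image. In addition, it can fetch remote URLs and automatically extracts local tar archives; archives downloaded from a URL are not extracted.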

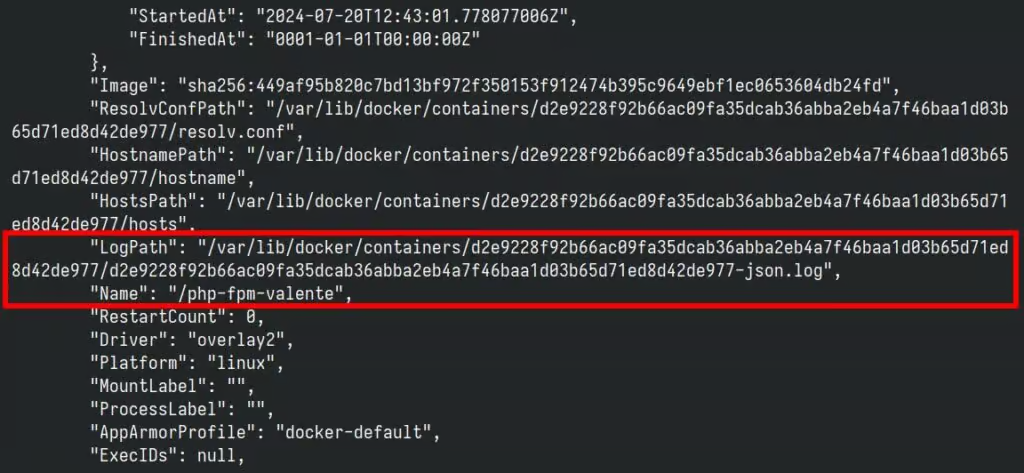
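The difference is easiest to see with a local archive: `COPY` puts the `.tar.gz` file into the image unchanged, while `ADD` unpacks it. Below is a minimal sketch that prepares such a build context. The function name, the `alpine:3.19` base image and the file names are assumptions chosen for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create a build context that contrasts COPY and ADD.
make_add_copy_context() {
  local ctx="$1"
  mkdir -p "$ctx/src"
  echo "hello" > "$ctx/src/hello.txt"
  # A local gzip-compressed tar archive inside the build context
  tar -czf "$ctx/app.tar.gz" -C "$ctx/src" hello.txt
  cat > "$ctx/Dockerfile" <<'EOF'
FROM alpine:3.19
# COPY: the archive is copied as-is -> /opt/copy/app.tar.gz
COPY app.tar.gz /opt/copy/
# ADD: a local tar archive is extracted -> /opt/add/hello.txt
ADD app.tar.gz /opt/add/
EOF
}
```

To try it:

```bash
make_add_copy_context /tmp/add-copy-demo
docker build -t add-copy-demo /tmp/add-copy-demo
docker run --rm add-copy-demo ls /opt/copy /opt/add
```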

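# Docker log disk usage

Docker containers are stateless: when a container is recreated, its logs are deleted along with it. The detail that is easy to miss is the other case. As long as a container keeps running, its json-file log keeps growing, without limit unless a maximum size is configured.

Find the 10 largest entries in the current directory (for example, run it in `/var/lib/docker`):

```bash
du -hs * | sort -rh | head -n 10
```

Docker log file location:

```text
/var/lib/docker/containers/<container-id>/<container-id>-json.log
```

List all Docker container logs, largest first:

```bash
find /var/lib/docker/containers/ -name "*json.log" | xargs du -h | sort -hr
```

Map a log file to its container. The directory name is the container ID, so look up the name from the ID, or the log path from the name:

```bash
docker inspect --format='{{.Name}}' <container_id>
docker inspect --format='{{.LogPath}}' <container_name>
```

You can also run `docker inspect <container>` to see the full details.

Empty a single Docker log file:

```bash
truncate -s 0 /var/lib/docker/containers/d2e9228f92b66ac09fa35dcab36abba2eb4a7f46baa1d03b65d71ed8d42de977/d2e9228f92b66ac09fa35dcab36abba2eb4a7f46baa1d03b65d71ed8d42de977-json.log
```

Empty all Docker log files:

```bash
truncate -s 0 /var/lib/docker/containers/*/*-json.log
```

Use `truncate` rather than `rm`. dockerd keeps the log file open, so deleting it does not release the space.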
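The find and truncate steps above can be combined into one helper that lists the largest json-file logs and empties them only when asked. Below is a minimal sketch. The function name and its `--truncate` switch are hypothetical, and the containers directory defaults to Docker's standard path.

```bash
#!/usr/bin/env bash
# Hypothetical helper: show the largest container logs, optionally truncating them.
# Usage: docker_logs_top [containers_dir] [--truncate]
docker_logs_top() {
  local dir="${1:-/var/lib/docker/containers}"
  local mode="${2:-}"
  # -print0 / -0 keep unusual file names intact; -r skips du when nothing is found
  find "$dir" -name '*-json.log' -print0 | xargs -0 -r du -h | sort -hr
  if [ "$mode" = "--truncate" ]; then
    # Empty the files in place so dockerd can keep writing to them
    find "$dir" -name '*-json.log' -exec truncate -s 0 {} +
  fi
}
```

Run `docker_logs_top` as root to inspect the logs. Run `docker_logs_top /var/lib/docker/containers --truncate` to also empty them.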

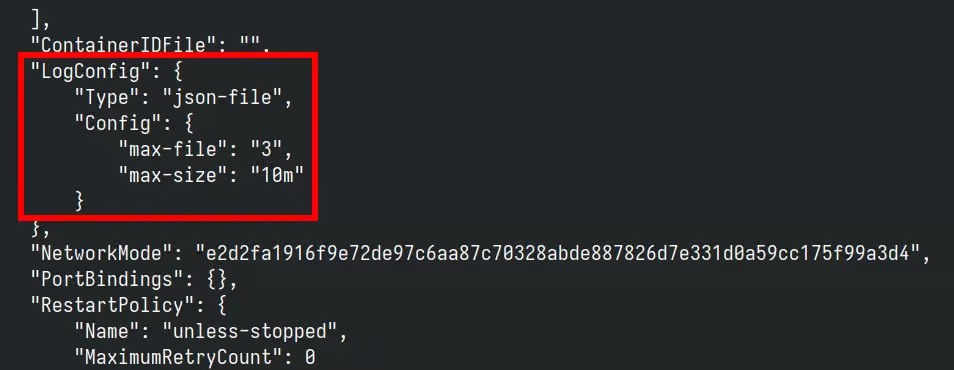

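Permanent fix for the log problem. Add these keys to `/etc/docker/daemon.json`, merging them into any existing settings rather than overwriting the file:

```bash
vim /etc/docker/daemon.json
```

```json
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}
```

In short, this sets the logging driver to `json-file`, limits each container log file to 10 MB, and keeps at most 3 log files per container.

After the change, restart the Docker service. Keep in mind that these settings only apply to newly created containers, not to existing ones. To apply them to an existing container, you have to remove it and create it again.

To check whether a container picked up the settings, use `docker inspect <container_name>`.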
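To see which containers already use the new limits and which still need to be recreated, you can list the log configuration of every container at once. Below is a minimal sketch. `.HostConfig.LogConfig.Type` and `.HostConfig.LogConfig.Config` are fields of `docker inspect` output. The function name and the `DOCKER_BIN` override, which is there only for trying it without a daemon, are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<name> <log driver> <log options>" for every container.
# Containers created before the daemon.json change typically show an empty map[].
show_log_config() {
  local docker="${DOCKER_BIN:-docker}"
  "$docker" ps -aq | xargs -r "$docker" inspect \
    --format '{{.Name}} {{.HostConfig.LogConfig.Type}} {{.HostConfig.LogConfig.Config}}'
}
```

Containers whose options do not show `max-size` and `max-file` are the ones to remove and recreate.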

# Harbor

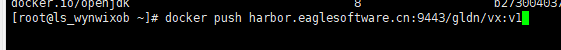

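# Tagging an image

```bash
docker tag SOURCE_IMAGE[:TAG] harbor.eaglesoftware.cn:9443/gldn/REPOSITORY[:TAG]
```

# Pushing an image

Log in to the Harbor registry first with `docker login harbor.eaglesoftware.cn:9443`, then push:

```bash
docker push harbor.eaglesoftware.cn:9443/gldn/REPOSITORY[:TAG]
```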

# Application deployment

```bash
# xxl-job
docker pull xuxueli/xxl-job-admin:2.3.1
# minio
docker pull minio/minio:RELEASE.2024-07-31T05-46-26Z
```

# neo4j deployment

```bash
docker run -d --name neo4j --network host \
  -v /data/neo4j/data:/data \
  -v /data/neo4j/logs:/logs \
  -v /data/neo4j/conf:/var/lib/neo4j/conf \
  -v /data/neo4j/import:/var/lib/neo4j/import \
  --env NEO4J_AUTH=neo4j/eagleneo4j \
  neo4j
```

It uses ports 7474 (HTTP browser) and 7687 (Bolt).

# screego

`SCREEGO_EXTERNAL_IP` is the server's public IP address.

```bash
docker run --net=host -e SCREEGO_EXTERNAL_IP=121.43.149.217 ghcr.io/screego/server:1.10.0
```

# sentinel (1.8.0)

```bash
docker run --restart always --name sentinel --network host bladex/sentinel-dashboard:latest
```

# elasticsearch

Raise the kernel's limit on memory-mapped areas per process (`vm.max_map_count`). Elasticsearch needs it to be at least 262144.

```bash
cat /proc/sys/vm/max_map_count
sysctl -w vm.max_map_count=262144
docker pull elasticsearch:7.1.0
```

Create the mount directories. Note: grant them 777 permissions (`chmod -R 777 <path>`) so the container can write to them.

```bash
mkdir -p /data/server/elasticsearch/config
mkdir -p /data/server/elasticsearch/data/
mkdir -p /data/server/elasticsearch/plugins
echo "http.host: 0.0.0.0" >> /data/server/elasticsearch/config/elasticsearch.yml
chmod -R 777 /data/server/elasticsearch
```

```bash
docker run --name elasticsearch --network host \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms512m -Xmx512m" \
  -v /data/server/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
  -v /data/server/elasticsearch/data/:/usr/share/elasticsearch/data \
  -v /data/server/elasticsearch/plugins:/usr/share/elasticsearch/plugins \
  -d elasticsearch:7.1.0
```
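`sysctl -w` only changes the running kernel, so the value is lost on reboot. Below is a minimal sketch that persists it through a drop-in file under `/etc/sysctl.d`, which systemd-sysctl reads at boot and `sysctl --system` reloads. The file name `99-elasticsearch.conf` and the function name are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: persist vm.max_map_count for Elasticsearch.
# The directory argument exists so the function can be tried outside /etc.
persist_max_map_count() {
  local dir="${1:-/etc/sysctl.d}"
  local value="${2:-262144}"
  mkdir -p "$dir"
  echo "vm.max_map_count=$value" > "$dir/99-elasticsearch.conf"
}
```

Run it as root, then apply and check the value with `sysctl --system && sysctl vm.max_map_count`.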

# nacos (2.2.3)

- If everything is configured correctly but startup against MySQL still fails with a `No DataSource set` error, try a different database. It may be an IP issue; the exact cause was not found.
- `NACOS_AUTH_ENABLE` turns on authentication and requires the related keys below. Clients must then be configured with a username and password.
- Default account: `nacos` / `nacos`.
- Nacos 2.x uses ports 8848, 9848 and 9849 by default. 9848 handles client gRPC requests and 9849 handles server-to-server gRPC requests.

```bash
docker run \
  -e MODE=standalone \
  -e NACOS_AUTH_ENABLE=true \
  -e NACOS_AUTH_TOKEN=Mi4yLjDniYjmnKzkuIDkupvml6DogYrnmoTkurrmj5Dkuoblu7rorq7ljrvmjonpu5jorqTlgLws6Im55LqG \
  -e NACOS_AUTH_IDENTITY_KEY=6L+Z5piv56eY6ZKl55qEa2V5 \
  -e NACOS_AUTH_IDENTITY_VALUE=6L+Z5piv56eY6ZKl55qEdmFs \
  -e PREFER_HOST_MODE=172.30.196.215 \
  -e SPRING_DATASOURCE_PLATFORM=mysql \
  -e MYSQL_SERVICE_HOST=172.30.196.215 \
  -e MYSQL_SERVICE_PORT=3306 \
  -e MYSQL_SERVICE_USER=root \
  -e MYSQL_SERVICE_PASSWORD=eaglenacos \
  -e MYSQL_SERVICE_DB_NAME=nacos_config \
  -e TIME_ZONE='Asia/Shanghai' \
  --network host \
  --name nacos \
  --restart=always \
  -d nacos/nacos-server:v2.2.3
```

The nacos-docker image documents `PREFER_HOST_MODE` as taking `ip` or `hostname` rather than an address, so the IP value above is probably not what it expects.

::: warning
Version 2.2.3 adds role-based permission control. The default user has permissions; a newly added user without permissions gets a 403 error.
:::

MySQL schema script (MySQL 5.7 by default), run against the `nacos_config` database:

```sql
/*
 * Copyright 1999-2018 Alibaba Group Holding Ltd.
 *
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 *      http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

/******************************************/
/*   Database name = nacos_config         */
/*   Table name = config_info             */
/******************************************/
CREATE TABLE `config_info` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `data_id` varchar(255) NOT NULL COMMENT 'data_id',
  `group_id` varchar(128) DEFAULT NULL,
  `content` longtext NOT NULL COMMENT 'content',
  `md5` varchar(32) DEFAULT NULL COMMENT 'md5',
  `gmt_create` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '创建时间',
  `gmt_modified` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '修改时间',
  `src_user` text COMMENT 'source user',
  `src_ip` varchar(20) DEFAULT NULL COMMENT 'source ip',
  `app_name` varchar(128) DEFAULT NULL,
  `tenant_id` varchar(128) DEFAULT '' COMMENT '租户字段',
  `c_desc` varchar(256) DEFAULT NULL,
  `c_use` varchar(64) DEFAULT NULL,
  `effect` varchar(64) DEFAULT NULL,
  `type` varchar(64) DEFAULT NULL,
  `c_schema` text,
  `encrypted_data_key` text NOT NULL COMMENT '密钥',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uk_configinfo_datagrouptenant` (`data_id`,`group_id`,`tenant_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='config_info';

/******************************************/
/*   Database name = nacos_config         */
/*   Table name = config_info_aggr        */
/******************************************/
CREATE TABLE `config_info_aggr` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `data_id` varchar(255) NOT NULL COMMENT 'data_id',
  `group_id` varchar(128) NOT NULL COMMENT 'group_id',
  `datum_id` varchar(255) NOT NULL COMMENT 'datum_id',
  `content` longtext NOT NULL COMMENT '内容',
  `gmt_modified` datetime NOT NULL COMMENT '修改时间',
  `app_name` varchar(128) DEFAULT NULL,
  `tenant_id` varchar(128) DEFAULT '' COMMENT '租户字段',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uk_configinfoaggr_datagrouptenantdatum` (`data_id`,`group_id`,`tenant_id`,`datum_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='增加租户字段';

/******************************************/
/*   Database name = nacos_config         */
/*   Table name = config_info_beta        */
/******************************************/
CREATE TABLE `config_info_beta` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `data_id` varchar(255) NOT NULL COMMENT 'data_id',
  `group_id` varchar(128) NOT NULL COMMENT 'group_id',
  `app_name` varchar(128) DEFAULT NULL COMMENT 'app_name',
  `content` longtext NOT NULL COMMENT 'content',
  `beta_ips` varchar(1024) DEFAULT NULL COMMENT 'betaIps',
  `md5` varchar(32) DEFAULT NULL COMMENT 'md5',
  `gmt_create` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '创建时间',
  `gmt_modified` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '修改时间',
  `src_user` text COMMENT 'source user',
  `src_ip` varchar(20) DEFAULT NULL COMMENT 'source ip',
  `tenant_id` varchar(128) DEFAULT '' COMMENT '租户字段',
  `encrypted_data_key` text NOT NULL COMMENT '密钥',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uk_configinfobeta_datagrouptenant` (`data_id`,`group_id`,`tenant_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='config_info_beta';

/******************************************/
/*   Database name = nacos_config         */
/*   Table name = config_info_tag         */
/******************************************/
CREATE TABLE `config_info_tag` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `data_id` varchar(255) NOT NULL COMMENT 'data_id',
  `group_id` varchar(128) NOT NULL COMMENT 'group_id',
  `tenant_id` varchar(128) DEFAULT '' COMMENT 'tenant_id',
  `tag_id` varchar(128) NOT NULL COMMENT 'tag_id',
  `app_name` varchar(128) DEFAULT NULL COMMENT 'app_name',
  `content` longtext NOT NULL COMMENT 'content',
  `md5` varchar(32) DEFAULT NULL COMMENT 'md5',
  `gmt_create` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '创建时间',
  `gmt_modified` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '修改时间',
  `src_user` text COMMENT 'source user',
  `src_ip` varchar(20) DEFAULT NULL COMMENT 'source ip',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uk_configinfotag_datagrouptenanttag` (`data_id`,`group_id`,`tenant_id`,`tag_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='config_info_tag';

/******************************************/
/*   Database name = nacos_config         */
/*   Table name = config_tags_relation    */
/******************************************/
CREATE TABLE `config_tags_relation` (
  `id` bigint(20) NOT NULL COMMENT 'id',
  `tag_name` varchar(128) NOT NULL COMMENT 'tag_name',
  `tag_type` varchar(64) DEFAULT NULL COMMENT 'tag_type',
  `data_id` varchar(255) NOT NULL COMMENT 'data_id',
  `group_id` varchar(128) NOT NULL COMMENT 'group_id',
  `tenant_id` varchar(128) DEFAULT '' COMMENT 'tenant_id',
  `nid` bigint(20) NOT NULL AUTO_INCREMENT,
  PRIMARY KEY (`nid`),
  UNIQUE KEY `uk_configtagrelation_configidtag` (`id`,`tag_name`,`tag_type`),
  KEY `idx_tenant_id` (`tenant_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='config_tag_relation';

/******************************************/
/*   Database name = nacos_config         */
/*   Table name = group_capacity          */
/******************************************/
CREATE TABLE `group_capacity` (
  `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT '主键ID',
  `group_id` varchar(128) NOT NULL DEFAULT '' COMMENT 'Group ID,空字符表示整个集群',
  `quota` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '配额,0表示使用默认值',
  `usage` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '使用量',
  `max_size` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '单个配置大小上限,单位为字节,0表示使用默认值',
  `max_aggr_count` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '聚合子配置最大个数,,0表示使用默认值',
  `max_aggr_size` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '单个聚合数据的子配置大小上限,单位为字节,0表示使用默认值',
  `max_history_count` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '最大变更历史数量',
  `gmt_create` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '创建时间',
  `gmt_modified` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '修改时间',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uk_group_id` (`group_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='集群、各Group容量信息表';

/******************************************/
/*   Database name = nacos_config         */
/*   Table name = his_config_info         */
/******************************************/
CREATE TABLE `his_config_info` (
  `id` bigint(64) unsigned NOT NULL,
  `nid` bigint(20) unsigned NOT NULL AUTO_INCREMENT,
  `data_id` varchar(255) NOT NULL,
  `group_id` varchar(128) NOT NULL,
  `app_name` varchar(128) DEFAULT NULL COMMENT 'app_name',
  `content` longtext NOT NULL,
  `md5` varchar(32) DEFAULT NULL,
  `gmt_create` datetime NOT NULL DEFAULT '2010-05-05 00:00:00',
  `gmt_modified` datetime NOT NULL DEFAULT '2010-05-05 00:00:00',
  `src_user` text,
  `src_ip` varchar(20) DEFAULT NULL,
  `op_type` char(10) DEFAULT NULL,
  `tenant_id` varchar(128) DEFAULT '' COMMENT '租户字段',
  `encrypted_data_key` text NOT NULL COMMENT '密钥',
  PRIMARY KEY (`nid`),
  KEY `idx_gmt_create` (`gmt_create`),
  KEY `idx_gmt_modified` (`gmt_modified`),
  KEY `idx_did` (`data_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='多租户改造';

/******************************************/
/*   Database name = nacos_config         */
/*   Table name = tenant_capacity         */
/******************************************/
CREATE TABLE `tenant_capacity` (
  `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT '主键ID',
  `tenant_id` varchar(128) NOT NULL DEFAULT '' COMMENT 'Tenant ID',
  `quota` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '配额,0表示使用默认值',
  `usage` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '使用量',
  `max_size` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '单个配置大小上限,单位为字节,0表示使用默认值',
  `max_aggr_count` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '聚合子配置最大个数',
  `max_aggr_size` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '单个聚合数据的子配置大小上限,单位为字节,0表示使用默认值',
  `max_history_count` int(10) unsigned NOT NULL DEFAULT '0' COMMENT '最大变更历史数量',
  `gmt_create` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '创建时间',
  `gmt_modified` datetime NOT NULL DEFAULT '2010-05-05 00:00:00' COMMENT '修改时间',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uk_tenant_id` (`tenant_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='租户容量信息表';

CREATE TABLE `tenant_info` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `kp` varchar(128) NOT NULL COMMENT 'kp',
  `tenant_id` varchar(128) default '' COMMENT 'tenant_id',
  `tenant_name` varchar(128) default '' COMMENT 'tenant_name',
  `tenant_desc` varchar(256) DEFAULT NULL COMMENT 'tenant_desc',
  `create_source` varchar(32) DEFAULT NULL COMMENT 'create_source',
  `gmt_create` bigint(20) NOT NULL COMMENT '创建时间',
  `gmt_modified` bigint(20) NOT NULL COMMENT '修改时间',
  PRIMARY KEY (`id`),
  UNIQUE KEY `uk_tenant_info_kptenantid` (`kp`,`tenant_id`),
  KEY `idx_tenant_id` (`tenant_id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='tenant_info';

CREATE TABLE users (
  username varchar(50) NOT NULL PRIMARY KEY,
  password varchar(500) NOT NULL,
  enabled boolean NOT NULL
);

CREATE TABLE roles (
  username varchar(50) NOT NULL,
  role varchar(50) NOT NULL,
  constraint uk_username_role UNIQUE (username,role)
);

CREATE TABLE permissions (
  role varchar(50) NOT NULL,
  resource varchar(512) NOT NULL,
  action varchar(8) NOT NULL,
  constraint uk_role_permission UNIQUE (role,resource,action)
);

INSERT INTO users (username, password, enabled) VALUES ('nacos', '$2a$10$EuWPZHzz32dJN7jexM34MOeYirDdFAZm2kuWj7VEOJhhZkDrxfvUu', TRUE);

INSERT INTO roles (username, role) VALUES ('nacos', 'ROLE_ADMIN');
```

# xxljob (2.4.0)

```bash
docker run --network host \
  --restart=always -d \
  -e PARAMS="--spring.datasource.url=jdbc:mysql://127.0.0.1:3306/xxl_job?useUnicode=true&characterEncoding=UTF-8 --spring.datasource.username=root --spring.datasource.password=123456 --xxl.job.accessToken=singleauthtoken" \
  --name xxl-job-admin \
  xuxueli/xxl-job-admin:2.4.0
```

Default account:

- Username: `admin`
- Password: `123456`

Console: http://172.30.196.141:8080/xxl-job-admin/

Database initialization script:

```sql
#
# XXL-JOB v2.4.0
# Copyright (c) 2015-present, xuxueli.

CREATE database if NOT EXISTS `xxl_job` default character set utf8mb4 collate utf8mb4_unicode_ci;
use `xxl_job`;

SET NAMES utf8mb4;

CREATE TABLE `xxl_job_info` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `job_group` int(11) NOT NULL COMMENT '执行器主键ID',
  `job_desc` varchar(255) NOT NULL,
  `add_time` datetime DEFAULT NULL,
  `update_time` datetime DEFAULT NULL,
  `author` varchar(64) DEFAULT NULL COMMENT '作者',
  `alarm_email` varchar(255) DEFAULT NULL COMMENT '报警邮件',
  `schedule_type` varchar(50) NOT NULL DEFAULT 'NONE' COMMENT '调度类型',
  `schedule_conf` varchar(128) DEFAULT NULL COMMENT '调度配置,值含义取决于调度类型',
  `misfire_strategy` varchar(50) NOT NULL DEFAULT 'DO_NOTHING' COMMENT '调度过期策略',
  `executor_route_strategy` varchar(50) DEFAULT NULL COMMENT '执行器路由策略',
  `executor_handler` varchar(255) DEFAULT NULL COMMENT '执行器任务handler',
  `executor_param` varchar(512) DEFAULT NULL COMMENT '执行器任务参数',
  `executor_block_strategy` varchar(50) DEFAULT NULL COMMENT '阻塞处理策略',
  `executor_timeout` int(11) NOT NULL DEFAULT '0' COMMENT '任务执行超时时间,单位秒',
  `executor_fail_retry_count` int(11) NOT NULL DEFAULT '0' COMMENT '失败重试次数',
  `glue_type` varchar(50) NOT NULL COMMENT 'GLUE类型',
  `glue_source` mediumtext COMMENT 'GLUE源代码',
  `glue_remark` varchar(128) DEFAULT NULL COMMENT 'GLUE备注',
  `glue_updatetime` datetime DEFAULT NULL COMMENT 'GLUE更新时间',
  `child_jobid` varchar(255) DEFAULT NULL COMMENT '子任务ID,多个逗号分隔',
  `trigger_status` tinyint(4) NOT NULL DEFAULT '0' COMMENT '调度状态:0-停止,1-运行',
  `trigger_last_time` bigint(13) NOT NULL DEFAULT '0' COMMENT '上次调度时间',
  `trigger_next_time` bigint(13) NOT NULL DEFAULT '0' COMMENT '下次调度时间',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

CREATE TABLE `xxl_job_log` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `job_group` int(11) NOT NULL COMMENT '执行器主键ID',
  `job_id` int(11) NOT NULL COMMENT '任务,主键ID',
  `executor_address` varchar(255) DEFAULT NULL COMMENT '执行器地址,本次执行的地址',
  `executor_handler` varchar(255) DEFAULT NULL COMMENT '执行器任务handler',
  `executor_param` varchar(512) DEFAULT NULL COMMENT '执行器任务参数',
  `executor_sharding_param` varchar(20) DEFAULT NULL COMMENT '执行器任务分片参数,格式如 1/2',
  `executor_fail_retry_count` int(11) NOT NULL DEFAULT '0' COMMENT '失败重试次数',
  `trigger_time` datetime DEFAULT NULL COMMENT '调度-时间',
  `trigger_code` int(11) NOT NULL COMMENT '调度-结果',
  `trigger_msg` text COMMENT '调度-日志',
  `handle_time` datetime DEFAULT NULL COMMENT '执行-时间',
  `handle_code` int(11) NOT NULL COMMENT '执行-状态',
  `handle_msg` text COMMENT '执行-日志',
  `alarm_status` tinyint(4) NOT NULL DEFAULT '0' COMMENT '告警状态:0-默认、1-无需告警、2-告警成功、3-告警失败',
  PRIMARY KEY (`id`),
  KEY `I_trigger_time` (`trigger_time`),
  KEY `I_handle_code` (`handle_code`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

CREATE TABLE `xxl_job_log_report` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `trigger_day` datetime DEFAULT NULL COMMENT '调度-时间',
  `running_count` int(11) NOT NULL DEFAULT '0' COMMENT '运行中-日志数量',
  `suc_count` int(11) NOT NULL DEFAULT '0' COMMENT '执行成功-日志数量',
  `fail_count` int(11) NOT NULL DEFAULT '0' COMMENT '执行失败-日志数量',
  `update_time` datetime DEFAULT NULL,
  PRIMARY KEY (`id`),
  UNIQUE KEY `i_trigger_day` (`trigger_day`) USING BTREE
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

CREATE TABLE `xxl_job_logglue` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `job_id` int(11) NOT NULL COMMENT '任务,主键ID',
  `glue_type` varchar(50) DEFAULT NULL COMMENT 'GLUE类型',
  `glue_source` mediumtext COMMENT 'GLUE源代码',
  `glue_remark` varchar(128) NOT NULL COMMENT 'GLUE备注',
  `add_time` datetime DEFAULT NULL,
  `update_time` datetime DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

CREATE TABLE `xxl_job_registry` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `registry_group` varchar(50) NOT NULL,
  `registry_key` varchar(255) NOT NULL,
  `registry_value` varchar(255) NOT NULL,
  `update_time` datetime DEFAULT NULL,
  PRIMARY KEY (`id`),
  KEY `i_g_k_v` (`registry_group`,`registry_key`,`registry_value`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

CREATE TABLE `xxl_job_group` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `app_name` varchar(64) NOT NULL COMMENT '执行器AppName',
  `title` varchar(12) NOT NULL COMMENT '执行器名称',
  `address_type` tinyint(4) NOT NULL DEFAULT '0' COMMENT '执行器地址类型:0=自动注册、1=手动录入',
  `address_list` text COMMENT '执行器地址列表,多地址逗号分隔',
  `update_time` datetime DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

CREATE TABLE `xxl_job_user` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `username` varchar(50) NOT NULL COMMENT '账号',
  `password` varchar(50) NOT NULL COMMENT '密码',
  `role` tinyint(4) NOT NULL COMMENT '角色:0-普通用户、1-管理员',
  `permission` varchar(255) DEFAULT NULL COMMENT '权限:执行器ID列表,多个逗号分割',
  PRIMARY KEY (`id`),
  UNIQUE KEY `i_username` (`username`) USING BTREE
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

CREATE TABLE `xxl_job_lock` (
  `lock_name` varchar(50) NOT NULL COMMENT '锁名称',
  PRIMARY KEY (`lock_name`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

INSERT INTO `xxl_job_group`(`id`, `app_name`, `title`, `address_type`, `address_list`, `update_time`) VALUES (1, 'xxl-job-executor-sample', '示例执行器', 0, NULL, '2018-11-03 22:21:31' );
INSERT INTO `xxl_job_info`(`id`, `job_group`, `job_desc`, `add_time`, `update_time`, `author`, `alarm_email`, `schedule_type`, `schedule_conf`, `misfire_strategy`, `executor_route_strategy`, `executor_handler`, `executor_param`, `executor_block_strategy`, `executor_timeout`, `executor_fail_retry_count`, `glue_type`, `glue_source`, `glue_remark`, `glue_updatetime`, `child_jobid`) VALUES (1, 1, '测试任务1', '2018-11-03 22:21:31', '2018-11-03 22:21:31', 'XXL', '', 'CRON', '0 0 0 * * ? *', 'DO_NOTHING', 'FIRST', 'demoJobHandler', '', 'SERIAL_EXECUTION', 0, 0, 'BEAN', '', 'GLUE代码初始化', '2018-11-03 22:21:31', '');
INSERT INTO `xxl_job_user`(`id`, `username`, `password`, `role`, `permission`) VALUES (1, 'admin', 'e10adc3949ba59abbe56e057f20f883e', 1, NULL);
INSERT INTO `xxl_job_lock` ( `lock_name`) VALUES ( 'schedule_lock');

commit;
```
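The seed row for `admin` stores the password as an unsalted MD5 hex digest: `e10adc3949ba59abbe56e057f20f883e` is the MD5 of `123456`. Below is a minimal sketch for replacing the default password after deployment. `xxl_job_password_sql` is a hypothetical helper; it prints the UPDATE statement to run against the `xxl_job` database. It assumes, as the seed data suggests, that xxl-job compares the MD5 digest of the entered password with `xxl_job_user.password`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print SQL that sets a new password for an xxl-job user.
# The stored value is the MD5 hex digest of the plain-text password.
xxl_job_password_sql() {
  local user="$1" password="$2"
  local digest
  # printf avoids the trailing newline that echo would add to the hashed input
  digest=$(printf '%s' "$password" | md5sum | cut -d' ' -f1)
  printf "UPDATE xxl_job_user SET password = '%s' WHERE username = '%s';\n" "$digest" "$user"
}
```

Example: `xxl_job_password_sql admin 'N3w-Passw0rd' | mysql -uroot -p xxl_job`.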

# seata(1.6.1)

1. Download the Docker image.

2. Create the seata database `seatadb` and run the SQL script below; the database is needed in the next step.

/*

Navicat Premium Data Transfer

Source Server : 172.30.196.141

Source Server Type : MySQL

Source Server Version : 50736 (5.7.36)

Source Host : 172.30.196.141:3306

Source Schema : seatadb

Target Server Type : MySQL

Target Server Version : 50736 (5.7.36)

File Encoding : 65001

Date: 17/10/2023 14:41:26

*/

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------

-- Table structure for branch_table

-- ----------------------------

DROP TABLE IF EXISTS `branch_table`;

CREATE TABLE `branch_table` (

`branch_id` bigint(20) NOT NULL,

`xid` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL,

`transaction_id` bigint(20) NULL DEFAULT NULL,

`resource_group_id` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`resource_id` varchar(256) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`branch_type` varchar(8) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`status` tinyint(4) NULL DEFAULT NULL,

`client_id` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`application_data` varchar(2000) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`gmt_create` datetime(6) NULL DEFAULT NULL,

`gmt_modified` datetime(6) NULL DEFAULT NULL,

PRIMARY KEY (`branch_id`) USING BTREE,

INDEX `idx_xid`(`xid`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of branch_table

-- ----------------------------

-- ----------------------------

-- Table structure for distributed_lock

-- ----------------------------

DROP TABLE IF EXISTS `distributed_lock`;

CREATE TABLE `distributed_lock` (

`lock_key` char(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL,

`lock_value` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL,

`expire` bigint(20) NULL DEFAULT NULL,

PRIMARY KEY (`lock_key`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of distributed_lock

-- ----------------------------

INSERT INTO `distributed_lock` VALUES ('AsyncCommitting', ' ', 0);

INSERT INTO `distributed_lock` VALUES ('RetryCommitting', ' ', 0);

INSERT INTO `distributed_lock` VALUES ('RetryRollbacking', ' ', 0);

INSERT INTO `distributed_lock` VALUES ('TxTimeoutCheck', ' ', 0);

INSERT INTO `distributed_lock` VALUES ('UndologDelete', ' ', 0);

-- ----------------------------

-- Table structure for global_table

-- ----------------------------

DROP TABLE IF EXISTS `global_table`;

CREATE TABLE `global_table` (

`xid` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL,

`transaction_id` bigint(20) NULL DEFAULT NULL,

`status` tinyint(4) NOT NULL,

`application_id` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`transaction_service_group` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`transaction_name` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`timeout` int(11) NULL DEFAULT NULL,

`begin_time` bigint(20) NULL DEFAULT NULL,

`application_data` varchar(2000) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`gmt_create` datetime NULL DEFAULT NULL,

`gmt_modified` datetime NULL DEFAULT NULL,

PRIMARY KEY (`xid`) USING BTREE,

INDEX `idx_status_gmt_modified`(`status`, `gmt_modified`) USING BTREE,

INDEX `idx_transaction_id`(`transaction_id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of global_table

-- ----------------------------

-- ----------------------------

-- Table structure for lock_table

-- ----------------------------

DROP TABLE IF EXISTS `lock_table`;

CREATE TABLE `lock_table` (

`row_key` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL,

`xid` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`transaction_id` bigint(20) NULL DEFAULT NULL,

`branch_id` bigint(20) NOT NULL,

`resource_id` varchar(256) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`table_name` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`pk` varchar(36) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NULL DEFAULT NULL,

`status` tinyint(4) NOT NULL DEFAULT 0 COMMENT '0:locked ,1:rollbacking',

`gmt_create` datetime NULL DEFAULT NULL,

`gmt_modified` datetime NULL DEFAULT NULL,

PRIMARY KEY (`row_key`) USING BTREE,

INDEX `idx_status`(`status`) USING BTREE,

INDEX `idx_branch_id`(`branch_id`) USING BTREE,

INDEX `idx_xid`(`xid`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of lock_table

-- ----------------------------

SET FOREIGN_KEY_CHECKS = 1;


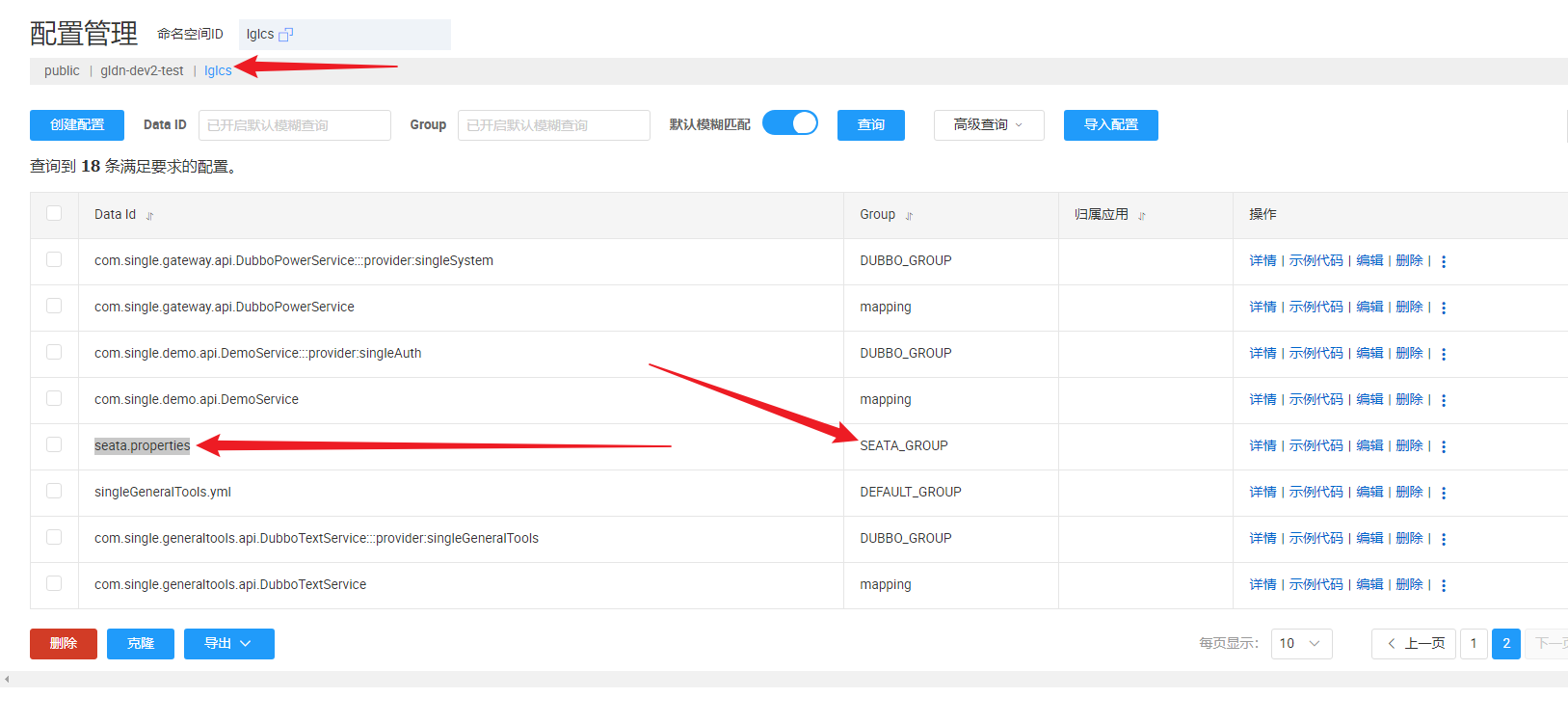

3. Create the Seata configuration in Nacos

Remember to change the database URL, username, and password in the configuration file.

# Nacos config entry

# Data ID: seata.properties

# Group: SEATA_GROUP

#For details about configuration items, see https://seata.io/zh-cn/docs/user/configurations.html

#Transport configuration, for client and server

transport.type=TCP

transport.server=NIO

transport.heartbeat=true

transport.enableTmClientBatchSendRequest=false

transport.enableRmClientBatchSendRequest=true

transport.enableTcServerBatchSendResponse=false

transport.rpcRmRequestTimeout=30000

transport.rpcTmRequestTimeout=30000

transport.rpcTcRequestTimeout=30000

transport.threadFactory.bossThreadPrefix=NettyBoss

transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker

transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler

transport.threadFactory.shareBossWorker=false

transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector

transport.threadFactory.clientSelectorThreadSize=1

transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread

transport.threadFactory.bossThreadSize=1

transport.threadFactory.workerThreadSize=default

transport.shutdown.wait=3

transport.serialization=seata

transport.compressor=none

#Transaction routing rules configuration, only for the client

# Default transaction group; it must match the client-side configuration. Keeping the default is recommended

service.vgroupMapping.default_tx_group=default

#If you use a registry, you can ignore it

service.default.grouplist=127.0.0.1:8091

service.enableDegrade=false

service.disableGlobalTransaction=false

#Transaction rule configuration, only for the client

client.rm.asyncCommitBufferLimit=10000

client.rm.lock.retryInterval=10

client.rm.lock.retryTimes=30

client.rm.lock.retryPolicyBranchRollbackOnConflict=true

client.rm.reportRetryCount=5

client.rm.tableMetaCheckEnable=true

client.rm.tableMetaCheckerInterval=60000

client.rm.sqlParserType=druid

client.rm.reportSuccessEnable=false

client.rm.sagaBranchRegisterEnable=false

client.rm.sagaJsonParser=fastjson

client.rm.tccActionInterceptorOrder=-2147482648

client.tm.commitRetryCount=5

client.tm.rollbackRetryCount=5

client.tm.defaultGlobalTransactionTimeout=60000

client.tm.degradeCheck=false

client.tm.degradeCheckAllowTimes=10

client.tm.degradeCheckPeriod=2000

client.tm.interceptorOrder=-2147482648

client.undo.dataValidation=true

client.undo.logSerialization=jackson

client.undo.onlyCareUpdateColumns=true

server.undo.logSaveDays=7

server.undo.logDeletePeriod=86400000

client.undo.logTable=undo_log

client.undo.compress.enable=true

client.undo.compress.type=zip

client.undo.compress.threshold=64k

#For TCC transaction mode

tcc.fence.logTableName=tcc_fence_log

tcc.fence.cleanPeriod=1h

#Log rule configuration, for client and server

log.exceptionRate=100

#Transaction storage configuration, only for the server. The file, db, and redis configuration values are optional.

store.mode=db

store.lock.mode=db

store.session.mode=db

#Used for password encryption

store.publicKey=

#If `store.mode,store.lock.mode,store.session.mode` are not equal to `file`, you can remove the configuration block.

store.file.dir=file_store/data

store.file.maxBranchSessionSize=16384

store.file.maxGlobalSessionSize=512

store.file.fileWriteBufferCacheSize=16384

store.file.flushDiskMode=async

store.file.sessionReloadReadSize=100

#These configurations are required if the `store mode` is `db`. If `store.mode,store.lock.mode,store.session.mode` are not equal to `db`, you can remove the configuration block.

store.db.datasource=druid

store.db.dbType=mysql

#store.db.driverClassName=com.mysql.cj.jdbc.Driver

# The MySQL 8 driver above is commented out; use the driver for MySQL 5.7 instead

store.db.driverClassName=com.mysql.jdbc.Driver

# Change the database connection URL, username, and password to your own

store.db.url=jdbc:mysql://121.43.149.217:3306/seatadb?rewriteBatchedStatements=true&serverTimezone=Asia/Shanghai

store.db.user=root

store.db.password=123456liguanglong

store.db.minConn=5

store.db.maxConn=30

store.db.globalTable=global_table

store.db.branchTable=branch_table

store.db.distributedLockTable=distributed_lock

store.db.queryLimit=100

store.db.lockTable=lock_table

store.db.maxWait=5000

#These configurations are required if the `store mode` is `redis`. If `store.mode,store.lock.mode,store.session.mode` are not equal to `redis`, you can remove the configuration block.

store.redis.mode=single

store.redis.single.host=127.0.0.1

store.redis.single.port=6379

store.redis.sentinel.masterName=

store.redis.sentinel.sentinelHosts=

store.redis.maxConn=10

store.redis.minConn=1

store.redis.maxTotal=100

store.redis.database=0

store.redis.password=

store.redis.queryLimit=100

#Transaction rule configuration, only for the server

server.recovery.committingRetryPeriod=1000

server.recovery.asynCommittingRetryPeriod=1000

server.recovery.rollbackingRetryPeriod=1000

server.recovery.timeoutRetryPeriod=1000

server.maxCommitRetryTimeout=-1

server.maxRollbackRetryTimeout=-1

server.rollbackRetryTimeoutUnlockEnable=false

server.distributedLockExpireTime=10000

server.xaerNotaRetryTimeout=60000

server.session.branchAsyncQueueSize=5000

server.session.enableBranchAsyncRemove=false

server.enableParallelRequestHandle=false

#Metrics configuration, only for the server

metrics.enabled=false

metrics.registryType=compact

metrics.exporterList=prometheus

metrics.exporterPrometheusPort=9898
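Because `store.mode`, `store.lock.mode`, and `store.session.mode` are all `db` here, the `store.file.*` and `store.redis.*` blocks are optional, and the `store.db.*` connection settings must be replaced with your own. A minimal sketch that renders the file before you paste it into Nacos, assuming the template is saved locally as `seata.properties` (the function names and output file name are hypothetical):

```shell
#!/usr/bin/env bash
# Sketch only: render a db-mode seata.properties before publishing it to Nacos.
# Hypothetical usage:
#   render_seata_properties seata.properties "$DB_URL" "$DB_USER" "$DB_PASSWORD" > seata-db.properties

# Escape characters that are special in a sed replacement using '|' as delimiter
# (the JDBC URL contains '&', which sed would otherwise treat as "matched text").
sed_escape() {
  printf '%s' "$1" | sed -e 's/[&|\\]/\\&/g'
}

render_seata_properties() {
  local template=$1 url=$2 user=$3 password=$4
  # Drop store.file.* and store.redis.* keys (unused when store.mode=db),
  # then overwrite the three database connection settings.
  grep -vE '^store\.(file|redis)\.' "$template" |
    sed -e "s|^store\.db\.url=.*|store.db.url=$(sed_escape "$url")|" \
        -e "s|^store\.db\.user=.*|store.db.user=$(sed_escape "$user")|" \
        -e "s|^store\.db\.password=.*|store.db.password=$(sed_escape "$password")|"
}
```

Comment lines such as `#If store.mode ...` are kept; only the key/value lines of the unused blocks are removed.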


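4. Edit the Seata server configuration file (application.yml), mount it into the container, and start the Docker image

First create application.yml on the host, then create the container. Once it is running, check in Nacos that seata-server registered successfully.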

```yaml
server:
  port: 7091

spring:
  application:
    name: seata-server

logging:
  config: classpath:logback-spring.xml
  file:
    path: ${user.home}/logs/seata
  extend:
    logstash-appender:
      destination: 127.0.0.1:4560
    kafka-appender:
      bootstrap-servers: 127.0.0.1:9092
      topic: logback_to_logstash

console:
  user:
    username: seata
    # Console login password
    password: seata@seata123

seata:
  config:
    # support: nacos, consul, apollo, zk, etcd3
    type: nacos
    nacos:
      server-addr: 114.132.252.197:8848
      # Namespace
      namespace: singlekj
      group: SEATA_GROUP
      # Nacos username and password
      username: nacos
      password: lglnacos@123
      data-id: seata.properties
  registry:
    # support: nacos, eureka, redis, zk, consul, etcd3, sofa
    type: nacos
    nacos:
      application: seata-server
      server-addr: 114.132.252.197:8848
      group: SEATA_GROUP
      # Namespace
      namespace: singlekj
      # Nacos username and password
      username: nacos
      password: lglnacos@123
      cluster: default
  # server:
  #   service-port: 8091 #If not configured, the default is '${server.port} + 1000'
  security:
    secretKey: SeataSecretKey0c382ef121d778043159209298fd40bf3850a017
    tokenValidityInMilliseconds: 1800000
    ignore:
      urls: /,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-fe/public/**,/api/v1/auth/login
```


docker run --name seata-server --network host -v /data/server/seataServer/application.yml:/seata-server/resources/application.yml seataio/seata-server:1.6.1

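Open http://localhost:7091 and log in with the `console.user` credentials from application.yml. With the configuration above that is seata / seata@seata123; seata/seata only applies if the default password is left unchanged.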
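To confirm the registration from the command line instead of the Nacos console, you can query the Nacos v1 Open API. This is a minimal sketch that assumes Nacos authentication is enabled (as the username/password in application.yml suggests). `NACOS_ADDR`, `NACOS_USER`, and `NACOS_PASSWORD` are hypothetical variables you set yourself, and `singlekj` must be the namespace ID, not just its display name:

```shell
#!/usr/bin/env bash
# Sketch only: check that seata-server is registered in Nacos (Open API v1).

# Pull "accessToken" out of the login response JSON without needing jq.
extract_access_token() {
  sed -n 's/.*"accessToken":"\([^"]*\)".*/\1/p'
}

# Build the instance-list URL for a service in a given group and namespace.
instance_list_url() {
  local addr=$1 token=$2 service=$3 group=$4 namespace=$5
  printf '%s/nacos/v1/ns/instance/list?serviceName=%s&groupName=%s&namespaceId=%s&accessToken=%s' \
    "$addr" "$service" "$group" "$namespace" "$token"
}

# Only talk to the network when NACOS_ADDR is set, e.g. NACOS_ADDR=http://114.132.252.197:8848
if [ -n "${NACOS_ADDR:-}" ]; then
  token=$(curl -s -X POST "$NACOS_ADDR/nacos/v1/auth/login" \
    --data-urlencode "username=$NACOS_USER" \
    --data-urlencode "password=$NACOS_PASSWORD" | extract_access_token)
  # A registered server should appear in the "hosts" array of the response.
  curl -s "$(instance_list_url "$NACOS_ADDR" "$token" seata-server SEATA_GROUP singlekj)"
  echo
fi
```

An empty `hosts` array usually means the namespace, group, or Nacos credentials in application.yml do not match what you queried.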

5. Add the undo_log table to every business database

CREATE TABLE `undo_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(20) NOT NULL,

`xid` varchar(100) NOT NULL,

`context` varchar(128) NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(11) NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

`ext` varchar(100) DEFAULT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
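Since the table is needed in every business database, one option is to generate a single script that switches to each schema and runs the DDL. This is a sketch under stated assumptions. The DDL above is saved as `undo_log.sql`, and the schema names, the container name `mysql`, and the `MYSQL_ROOT_PASSWORD` variable inside the container are all hypothetical:

```shell
#!/usr/bin/env bash
# Sketch only: emit one SQL script that creates undo_log in each listed schema.

build_undo_log_script() {
  local ddl=$1; shift
  local db
  for db in "$@"; do
    printf 'USE `%s`;\n' "$db"
    # IF NOT EXISTS makes it safe to re-run the script.
    sed 's/^CREATE TABLE `undo_log`/CREATE TABLE IF NOT EXISTS `undo_log`/' "$ddl"
  done
}

# Hypothetical usage against a MySQL container:
#   build_undo_log_script undo_log.sql order_db stock_db |
#     docker exec -i mysql sh -c 'exec mysql -uroot -p"$MYSQL_ROOT_PASSWORD"'
```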


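6. Annotate the called (participant) methods with `@Transactional`; only the initiating method gets `@GlobalTransactional`.

7. Add the Seata configuration to the Java application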

---

```yaml
# Seata registry and config center
seata:
  enabled: true
  application-id: ${spring.application.name}
  # Transaction group; must match the one in the Nacos config (seata.properties)
  tx-service-group: default_tx_group
  service:
    vgroup-mapping:
      # Must match the mapping in Nacos
      default_tx_group: default
  registry:
    type: nacos
    nacos:
      # Must match the service name seata-server actually registered
      application: seata-server
      server-addr: ${spring.cloud.nacos.server-addr}
      # @...@ are Maven resource-filtering placeholders; quoted because a
      # plain YAML value cannot start with '@'
      namespace: '@profiles.active@'
      group: SEATA_GROUP
      username: '@nacos.username@'
      password: '@nacos.password@'
  config:
    type: nacos
    nacos:
      server-addr: ${spring.cloud.nacos.server-addr}
      namespace: '@profiles.active@'
      group: SEATA_GROUP
      username: '@nacos.username@'
      password: '@nacos.password@'
      data-id: seata.properties
```
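The client's `tx-service-group` (`default_tx_group`) must have a matching `service.vgroupMapping.default_tx_group` entry in seata.properties. The cluster it maps to (`default`) must also equal the cluster seata-server registers (`cluster: default` in application.yml). A minimal sketch that checks this chain against a local copy of seata.properties is shown below. It is a simplified model of the lookup, not Seata's own code, and the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch only: verify that a transaction group is routed to the expected cluster.

# Print the cluster mapped to a transaction group, or nothing if unmapped.
resolve_tx_cluster() {
  local props=$1 group=$2
  awk -F= -v key="service.vgroupMapping.$group" '$1 == key { print $2; exit }' "$props"
}

# Succeed (and print the mapping) only if the group maps to expected_cluster.
check_tx_group() {
  local props=$1 group=$2 expected_cluster=$3 cluster
  cluster=$(resolve_tx_cluster "$props" "$group")
  if [ -z "$cluster" ]; then
    echo "no service.vgroupMapping.$group in $props" >&2
    return 1
  elif [ "$cluster" != "$expected_cluster" ]; then
    echo "$group maps to '$cluster', but the server registers cluster '$expected_cluster'" >&2
    return 1
  fi
  echo "$group -> $cluster"
}

# Hypothetical usage: check_tx_group seata.properties default_tx_group default
```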


# Performance monitoring

docker run --rm -ti --name=ctop --volume /var/run/docker.sock:/var/run/docker.sock:ro quay.io/vektorlab/ctop:latest